Project Overview

This project analyzes AI security in the context of national security, using game theory to evaluate strategic interactions among actors in the AI security landscape. The analysis identifies key capabilities, adversarial advantages, and national threat risks, and provides a strategic framework for nation-state engagement with AI security challenges.

Our approach integrates findings from extensive document analysis, external research on current AI security threats, and game theory modeling to provide actionable insights for national security stakeholders.

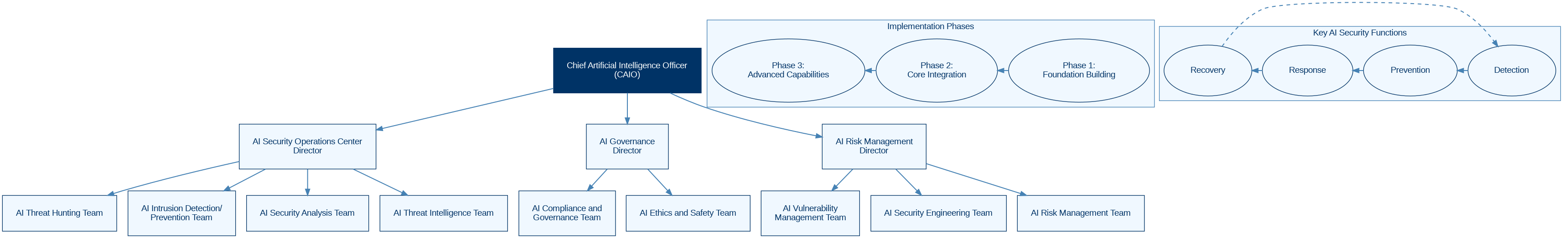

AI Security Framework Components

Executive Summary

This analysis examines AI security in the context of national security, based on an extensive review of documents covering AI security frameworks, threat intelligence, compliance, governance, ethics, and risk management. It uses game theory to evaluate the strategic interactions among actors in the AI security landscape and to identify key capabilities, adversarial advantages, and national threat risks.

Our key findings reveal several critical components of effective AI security frameworks, including AI-specific security functions, clear organizational structure, and phased implementation approaches. The analysis also highlights significant national security implications of AI technologies, including an emerging threat landscape, strategic importance, and military and defense applications.

Stephen Pullum's extensive experience in cybersecurity, AI governance, and military operations positions him as a valuable consultant on AI security challenges. His expertise aligns with the high engagement scores for Regulatory Bodies in developing regulatory frameworks, and it is relevant to countering the high threat risks identified for Nation State Attackers.

Based on our comprehensive analysis, we recommend a strategic framework for nation state engagement with AI security challenges, emphasizing strategic investments, adaptive governance, international cooperation, public-private partnerships, defensive enhancements, and strategic communication.

Key Findings

AI-Specific Security Functions

Specialized functions including AI Threat Hunting, AI Intrusion Detection/Prevention, AI Security Analysis, AI Vulnerability Management, AI Security Engineering, AI Risk Management, AI Compliance and Governance, AI Ethics and Safety, and AI Threat Intelligence are essential for comprehensive AI security.

Organizational Structure

A clear hierarchy from executive level (CAIO) to specialized technical functions, with integration between traditional cybersecurity and AI-specific security capabilities, is necessary for effective AI security governance.

Implementation Approaches

Successful implementation requires phased deployment (foundation building, core integration, advanced capabilities), integration with existing security infrastructure, and continuous improvement.

Emerging Threat Landscape

AI-specific attack vectors including model poisoning, evasion attacks, adversarial examples, training data manipulation, model theft, and safety alignment failures pose novel threats to national security.

Strategic Importance

AI security has become a critical national security priority requiring public-private partnerships, international cooperation, and comprehensive regulatory frameworks.

Military and Defense Applications

AI is increasingly integrated into offensive and defensive cyber operations, with specialized tools enhancing red team/penetration testing capabilities.

Game Theory Analysis

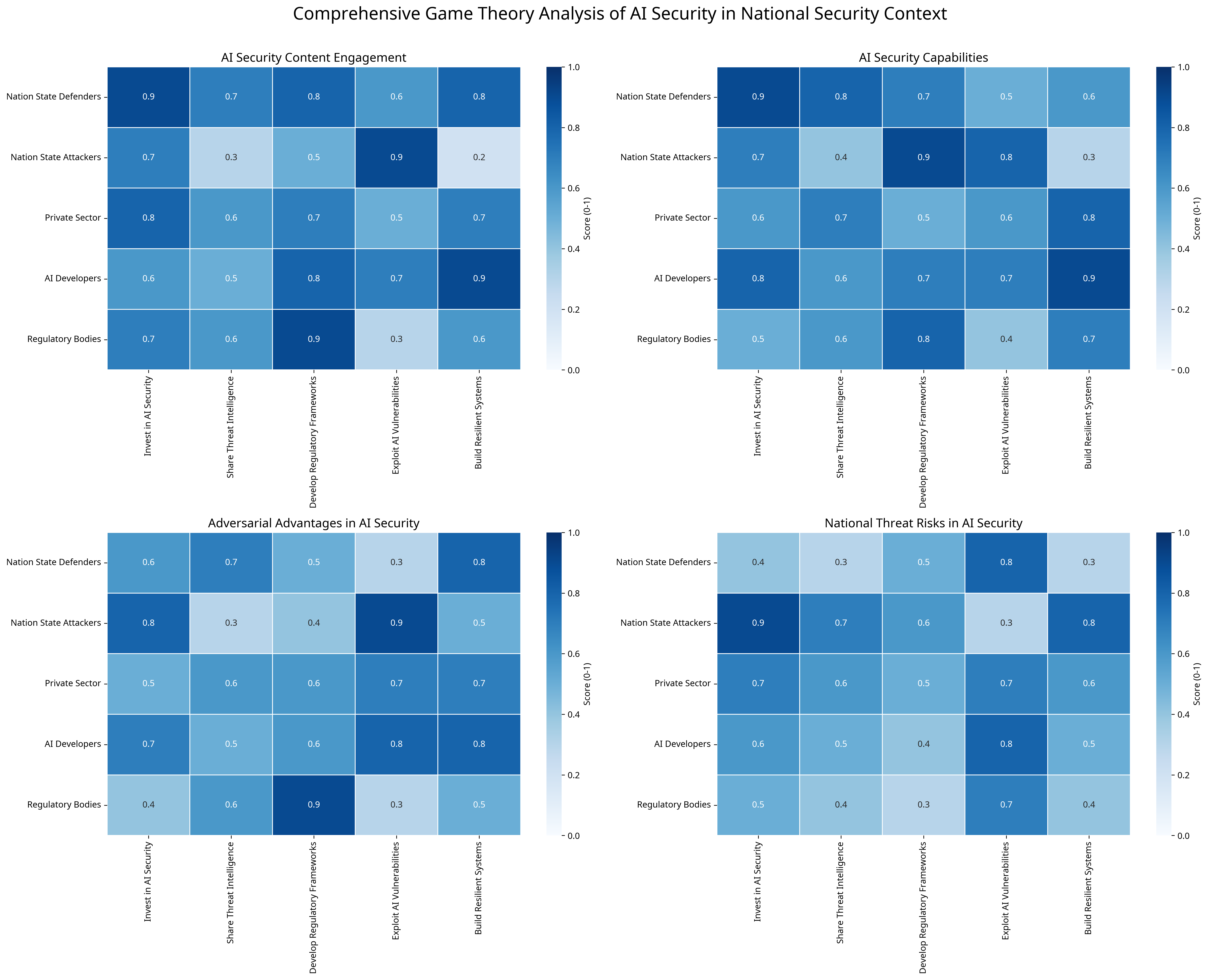

Our game theory analysis examined the strategic interactions between key actors (Nation State Defenders, Nation State Attackers, Private Sector, AI Developers, Regulatory Bodies) across multiple dimensions.

Comprehensive Game Theory Matrix

Analysis of content engagement, capabilities, adversarial advantages, and national threat risks across different actors and strategies.

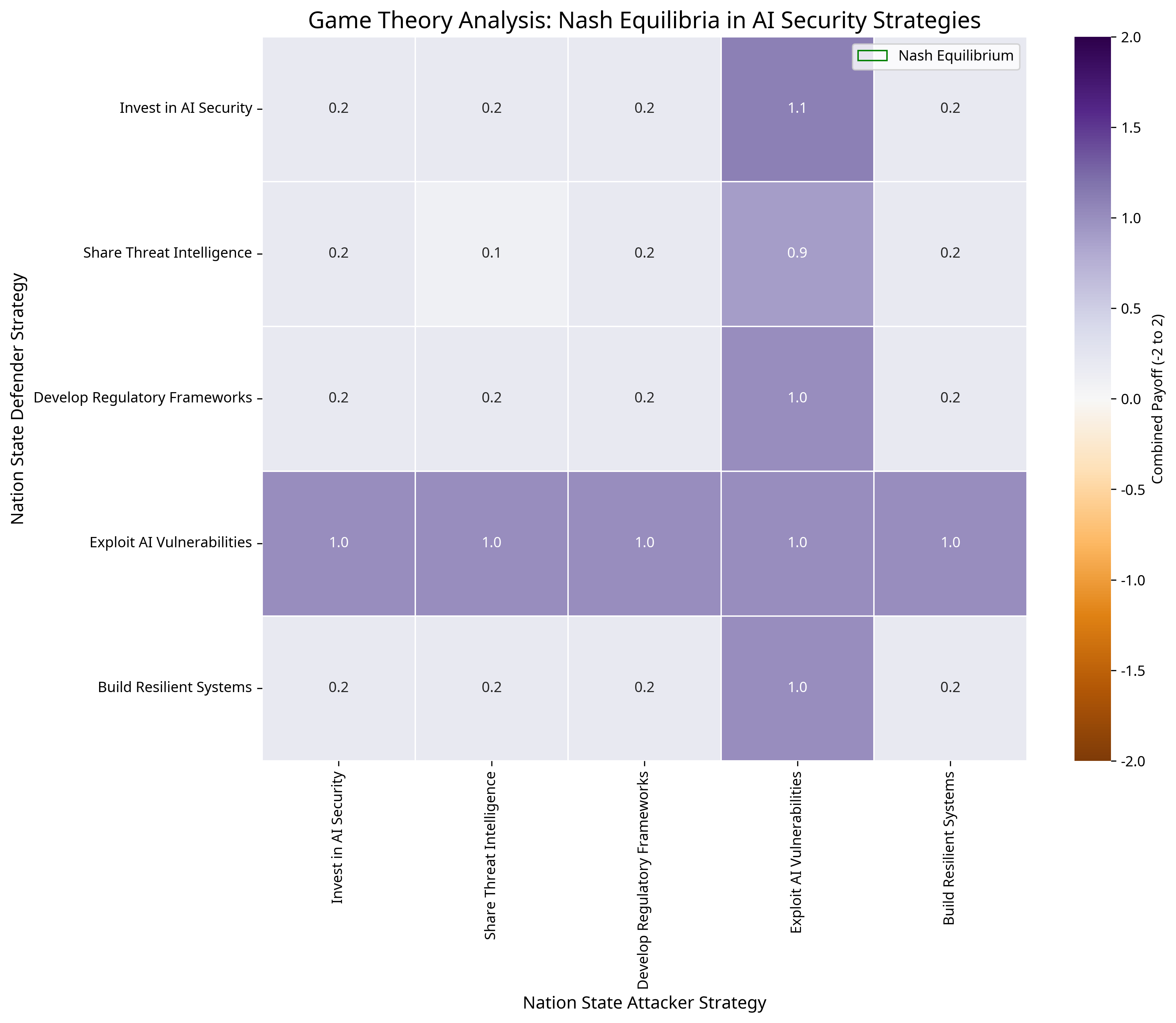

Nash Equilibria Analysis

Strategic balance points between defensive and offensive strategies, highlighting optimal decision-making for nation states.
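As a minimal sketch of what a Nash equilibrium check involves, the Python below enumerates pure-strategy equilibria in a two-player game between a defender and an attacker. The payoff numbers, the two-by-two shape, and the function name `pure_nash_equilibria` are illustrative assumptions and are not taken from the matrices in this analysis. The strategy labels are paraphrased from the report.

```python
from typing import List, Sequence, Tuple


def pure_nash_equilibria(
    row_payoffs: Sequence[Sequence[float]],
    col_payoffs: Sequence[Sequence[float]],
) -> List[Tuple[int, int]]:
    """Return every (row, column) cell where neither player gains by deviating alone."""
    n_rows = len(row_payoffs)
    n_cols = len(row_payoffs[0])
    equilibria = []
    for i in range(n_rows):
        for j in range(n_cols):
            # Row player (defender): no other row does better against column j.
            row_best = all(row_payoffs[i][j] >= row_payoffs[k][j] for k in range(n_rows))
            # Column player (attacker): no other column does better against row i.
            col_best = all(col_payoffs[i][j] >= col_payoffs[i][m] for m in range(n_cols))
            if row_best and col_best:
                equilibria.append((i, j))
    return equilibria


# Hypothetical strategies and payoffs, chosen only for illustration.
defender_strategies = ["Invest in AI security", "Build resilient systems"]
attacker_strategies = ["Exploit AI vulnerabilities", "Exploit regulatory frameworks"]
defender_payoffs = [[2, 1], [3, 0]]  # rows: defender strategy, columns: attacker strategy
attacker_payoffs = [[1, 2], [2, 3]]

for i, j in pure_nash_equilibria(defender_payoffs, attacker_payoffs):
    print(defender_strategies[i], "/", attacker_strategies[j])
# prints: Invest in AI security / Exploit regulatory frameworks
```

A game can have no pure-strategy equilibrium at all, in which case the function returns an empty list. Mixed-strategy equilibria need a different method and are outside this sketch.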

Key Matrix Insights

- Content Engagement Matrix: Nation State Defenders show the highest engagement (0.9) in investing in AI security, while Nation State Attackers focus on exploiting AI vulnerabilities (0.9).

- Capabilities Matrix: Nation State Defenders excel in AI security investment (0.9), while Nation State Attackers demonstrate high capabilities in regulatory framework exploitation (0.9).

- Adversarial Advantages Matrix: Nation State Attackers maintain significant advantages in exploiting AI vulnerabilities (0.9), while Nation State Defenders lead in building resilient systems (0.8).

- National Threat Risks Matrix: Nation State Attackers pose the highest threat risk (0.9) in AI security investment areas, indicating a strategic focus on compromising defensive capabilities.
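The scores quoted in these insights can be held in a small lookup structure for comparison. The Python sketch below records only the values stated in the bullets above. The dictionary layout, the strategy labels (paraphrased from the bullets), and the helper name `top_entries` are assumptions made for illustration, and the full matrices in the figures contain more cells than are listed here.

```python
from typing import Dict, List, Tuple

# (actor, strategy) -> score
Scores = Dict[Tuple[str, str], float]

# Only the scores quoted in the insights above; all other cells are omitted.
matrices: Dict[str, Scores] = {
    "Content Engagement": {
        ("Nation State Defenders", "Invest in AI security"): 0.9,
        ("Nation State Attackers", "Exploit AI vulnerabilities"): 0.9,
    },
    "Capabilities": {
        ("Nation State Defenders", "Invest in AI security"): 0.9,
        ("Nation State Attackers", "Exploit regulatory frameworks"): 0.9,
    },
    "Adversarial Advantages": {
        ("Nation State Attackers", "Exploit AI vulnerabilities"): 0.9,
        ("Nation State Defenders", "Build resilient systems"): 0.8,
    },
    "National Threat Risks": {
        ("Nation State Attackers", "Invest in AI security"): 0.9,
    },
}


def top_entries(scores: Scores) -> List[Tuple[str, str]]:
    """Return the (actor, strategy) pairs that share the highest score, sorted by name."""
    best = max(scores.values())
    return sorted(key for key, value in scores.items() if value == best)


for name, scores in matrices.items():
    print(name, "->", top_entries(scores))
```

Keeping ties in the result, rather than returning a single winner, matters here because several matrices report the same 0.9 score for both defenders and attackers.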

Nation State Strategy for Engagement

Based on our analysis, we recommend the following strategic framework for nation-state engagement with AI security challenges:

1. Strategic Investment Priorities

Nation states should prioritize investments in AI Security Research and Development, Talent Development, and Technical Infrastructure to build robust defensive capabilities.

2. Regulatory and Governance Framework

Effective governance requires Adaptive Regulatory Approaches, Risk-Based Classification, and Compliance Verification Mechanisms to ensure security without stifling innovation.

3. International Cooperation Strategy

Nation states should pursue Multilateral Agreements, Threat Intelligence Sharing, and Capacity Building to create a coordinated global response to AI security threats.

4. Public-Private Partnership Model

Effective engagement requires Collaborative Research Initiatives, Information Sharing Frameworks, and Market Incentives to leverage private sector innovation for national security.

5. Defensive Posture Enhancement

Nation states should strengthen defenses through AI Security Operations Centers, Red Team Capabilities, and Resilience Planning to protect critical AI infrastructure.

6. Strategic Communication

Effective communication requires Transparency Initiatives, Deterrence Signaling, and Public Awareness to build trust and establish clear boundaries for adversaries.

Expert Analysis: Stephen Pullum's Skillset Relevance

Stephen Pullum's extensive experience in cybersecurity, AI governance, and military operations positions him as a valuable consultant in addressing AI security challenges:

Key Skillset Relevance Areas

- AI Governance and Ethics Expertise: His certifications (CAIO, AIGA) and advisory roles (NIST, EU AI Office) directly align with the high engagement score (0.9) for Regulatory Bodies in developing regulatory frameworks.

- Cybersecurity and Military Background: His 27-year military career and experience leading security operations address the high threat risks (0.8-0.9) identified for Nation State Attackers.

- AI Technical Expertise: His numerous AI certifications are relevant to the high capabilities score (0.9) for AI Developers in building resilient systems.

- Risk Management and Compliance: His experience directing AI governance and risk strategies is relevant to the high engagement score (0.9) for Regulatory Bodies in developing regulatory frameworks.

- Strategic Vision and Leadership: His recognition as a leading AI expert and his vision of making AI safe and understandable align with the strategic balance highlighted in the Nash equilibria analysis.

Contact Stephen Pullum

Email: stephen.pullum@africurityai.com

Phone: +1 (555) 123-4567

LinkedIn: linkedin.com/in/stephenpullum

Position: Chief Artificial Intelligence Officer (CAIO)

Company: AfricurityAI